In the last chapter, we looked at the basics of a process (or task) in the Linux kernel, along with a brief overview of struct task_struct. Now we are going to discuss how a process or task gets created.

In Linux, a new task can be created using the fork() system call. fork() creates an almost exact copy of the parent process. The child differs in its pid (which is unique), its parent pointer, and its resource utilization counters (set to 0, initially). The rest of the task descriptor starts out as a copy of the parent's, but there are a lot of fields in the task descriptor that must not be carried over to the child unchanged. So the forking operation is implemented in the kernel using the clone() system call, which in turn calls the do_fork() function in kernel/fork.c.
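
The user-visible side of this can be checked from a small C program. The sketch below is only an illustration: fork_reports_parent() is a hypothetical helper name, not something from the kernel or from libc. It forks, and the child verifies that it received a new pid and that getppid() reports the task that called fork().

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Hypothetical helper for illustration.
 * Returns 1 if the child saw a new pid and the expected parent,
 * 0 if it did not, and -1 if fork() or waitpid() failed.
 */
int fork_reports_parent(void)
{
    pid_t parent = getpid();   /* recorded before forking */
    pid_t child = fork();
    int status;

    if (child < 0)
        return -1;

    if (child == 0) {
        /* Child: its own pid is new, its parent is the forking task. */
        int ok = (getpid() != parent) && (getppid() == parent);
        _exit(ok ? 0 : 1);
    }

    /* Parent: fork() returned the pid of the new task. */
    assert(child != parent);

    if (waitpid(child, &status, 0) != child)
        return -1;

    return WIFEXITED(status) && WEXITSTATUS(status) == 0;
}
```

Calling fork_reports_parent() from main() and printing the result is enough to try it out. The parent waits for the child, so the child cannot be reparented while it performs the check.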

do_fork() relies predominantly on an unsigned long argument called clone_flags to determine what needs to be shared or copied. The bulk of the work is done by copy_process(), which do_fork() calls and which has the following code:

retval = security_task_create(clone_flags);

The first substantive step of copy_process() is to call security_task_create(), implemented in security/security.c. This function goes through a structure called struct security_operations, which holds the hooks of the active security module. It takes clone_flags as input and determines whether the current process has sufficient permission to create a child process. With SELinux, this is done by the selinux_task_create() function in security/selinux/hooks.c, which calls current_has_perm() to check whether the current task holds the required permission. The check uses the access vector cache, a component that caches access decision computations in order to avoid doing them over and over again. If security_task_create() returns a nonzero value, copy_process() cannot create the new task; a return value of 0 means the permission was granted.
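
The shape of this check can be sketched in user space. Everything in the block below is a hypothetical stand-in written for illustration (struct demo_security_ops, allow_all(), deny_all(), demo_copy_process()); none of it is kernel code. It assumes the usual kernel convention that a hook returns 0 to allow the operation and a negative errno value to refuse it.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical stand-in for a table of security hooks. */
struct demo_security_ops {
    int (*task_create)(unsigned long clone_flags);
};

/* Hypothetical hook that always grants permission. */
int allow_all(unsigned long clone_flags)
{
    (void)clone_flags;
    return 0;
}

/* Hypothetical hook that always refuses. */
int deny_all(unsigned long clone_flags)
{
    (void)clone_flags;
    return -EPERM;
}

/*
 * Mimics the start of task creation: ask the security hook first and
 * give up with its error code if the hook returns anything but 0.
 */
int demo_copy_process(const struct demo_security_ops *ops,
                      unsigned long clone_flags)
{
    int retval;

    assert(ops != NULL && ops->task_create != NULL);

    retval = ops->task_create(clone_flags);
    if (retval)
        return retval;   /* permission refused, no task is created */

    /* A real implementation would go on to duplicate the task here. */
    return 0;
}
```

Routing the check through a table of function pointers is what lets different security modules plug in their own policy without the caller changing.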

p = dup_task_struct(current);

ftrace_graph_init_task(p);

rt_mutex_init_task(p);

retval = copy_creds(p, clone_flags);

The next step is to duplicate the struct task_struct by calling dup_task_struct(). Once the struct task_struct is duplicated, the fields that do not make sense in the child, such as the pointers to the parent task, are set to appropriate values, as explained in the paragraphs that follow. This is followed by a call to copy_creds(), implemented in kernel/cred.c. copy_creds() gives the child the credentials of the parent, and at this point a new clone_flags value called CLONE_THREAD has to be introduced.
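
Since clone_flags is a bit mask, a flag such as CLONE_THREAD is tested with a bitwise AND. The sketch below shows the idea in user space. wants_thread() and check_clone_flags() are hypothetical helpers, not kernel functions; the second one mirrors a rule documented in the clone(2) manual page, namely that CLONE_THREAD requires CLONE_SIGHAND, which in turn requires CLONE_VM. It assumes the Linux user-space header linux/sched.h is installed.

```c
#include <errno.h>
#include <linux/sched.h>   /* CLONE_VM, CLONE_SIGHAND, CLONE_THREAD */

/* Hypothetical helper: nonzero if the caller asked for a thread. */
int wants_thread(unsigned long clone_flags)
{
    return (clone_flags & CLONE_THREAD) != 0;
}

/*
 * Hypothetical helper mirroring the flag dependencies documented in
 * clone(2). Returns 0 if the combination is acceptable, -EINVAL if not.
 */
int check_clone_flags(unsigned long clone_flags)
{
    if ((clone_flags & CLONE_THREAD) && !(clone_flags & CLONE_SIGHAND))
        return -EINVAL;
    if ((clone_flags & CLONE_SIGHAND) && !(clone_flags & CLONE_VM))
        return -EINVAL;
    return 0;
}
```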

In Linux, there is no fundamental differentiation between threads and tasks (or processes). They are both created and destroyed in the same way, but handled a little differently. The CLONE_THREAD flag says that the child has to be placed in the same thread group as the parent. The parent of the child in this case is the same as the parent of the task that called clone(), and not the task that called clone() itself. The process and session keyrings (handled later) are shared between all the threads in a process.

struct task_struct has two parent pointers, namely real_parent and parent. When the CLONE_THREAD flag is set, copy_process() sets the child's real_parent to the real_parent of the task that invoked clone(), so the invoking task does not become the parent and has no parental control over the newly created task. The parent pointer names the task that receives SIGCHLD and wait() reports; it is normally the same as real_parent and differs only in cases such as a task being traced by a debugger. The getppid() system call therefore returns the same parent process for every thread in the group, and only that parent gets SIGCHLD, once the whole thread group has terminated; it collects the exit status with the wait() system call. Because the threads belong to one process, copy_creds() in the common case lets a new thread share the credentials and keyrings of the calling task instead of preparing a separate copy. So threads in Linux are processes that share a parent and a user address space. Note that the threads we are talking about here are user threads that a program creates using libraries such as pthread or zthread. They are completely different from kernel threads, which the Linux kernel creates for its internal operations.
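
The effect of CLONE_THREAD can be observed from user space. The sketch below assumes a Linux system whose pthread library creates threads through clone() with CLONE_THREAD, which is how glibc's NPTL works. struct thread_ids, record_ids() and ids_from_new_thread() are hypothetical names used for illustration. A new thread records its process id, parent process id and kernel task id so that they can be compared with those of the creating thread.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <unistd.h>

/* Identifiers as seen from inside one thread. */
struct thread_ids {
    pid_t pid;    /* thread group id, what getpid() reports */
    pid_t ppid;   /* parent of the thread group */
    pid_t tid;    /* kernel task id of this particular thread */
};

/* Thread body: record the identifiers visible to the new thread. */
static void *record_ids(void *arg)
{
    struct thread_ids *ids = arg;

    ids->pid = getpid();
    ids->ppid = getppid();
    ids->tid = (pid_t)syscall(SYS_gettid);
    return NULL;
}

/* Returns 0 on success or a pthread error number on failure. */
int ids_from_new_thread(struct thread_ids *out)
{
    pthread_t thread;
    int err;

    assert(out != NULL);

    err = pthread_create(&thread, NULL, record_ids, out);
    if (err)
        return err;
    return pthread_join(thread, NULL);
}
```

On such a system the new thread should report the same pid and ppid as its creator but a different task id, since each thread is a separate task inside the kernel. Older toolchains may need the -pthread option when compiling.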

After this, the function sets the CPU time utilized by the child to zero and splits the parent's CPU time so that the child receives a share of it. Then the sched_fork() function defined in kernel/sched.c is invoked. Process scheduling is a separate topic of discussion.

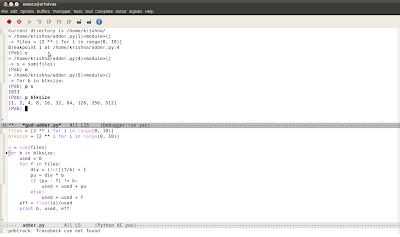

In this chapter, we looked at how a task is created in the Linux kernel, or rather which important operations are performed during task creation. In the next chapter, we will look at task termination. In the meantime, you can put printk() statements in the copy_process() function of kernel/fork.c, check the kernel log, and observe how it works.